Germany is holding parliamentary elections on February 23, 2025. For multi-billionaire and Trump confidant Elon Musk, it is clear that the AfD, a party classified as partly right-wing extremist by the German Federal Office for the Protection of the Constitution, must win them. Only the AfD can save Germany, Musk wrote on his platform X.

He also offered AfD leader Alice Weidel the opportunity to hold a discussion on the X platform. The AfD is considered the most active party on German social media, especially on the Chinese social media platform TikTok. Videos with AfD positions are viewed on TikTok by hundreds of thousands of people.

According to political and communication expert Johannes Hillje, each AfD video was viewed an average of more than 430,000 times in 2022 and 2023. For comparison: in second place are the videos of the conservative parliamentary group CDU/CSU – with an average of around 90,000 views.

Does social media favor right-wing parties?

No, says Andreas Jungherr, professor of political science and digital transformation at Otto-Friedrich University in Bamberg. “The AfD has been active on social media for a long time.” It has learned what approach works there.

This is a clear advantage in terms of reach – but that alone does not guarantee electoral success, says Jungherr. This is clearly seen in the campaign of US presidential candidate Kamala Harris, who had great success on social media. But as we know, this was not enough to bring her to the White House.

What impact does social media have on values and beliefs?

So-called “filter bubbles” arise in the online space because search results or content are personalized. Algorithms of online service providers determine what is shown to us on the Internet. On social media, an algorithm gives priority to content from well-known personalities or content that has been liked or commented on by many other users. On the other hand, the algorithm may no longer display certain content at all if it has been ignored frequently.

This creates a one-sided perspective: one's worldview is reinforced, while the opinions or attitudes of others are ignored. Above all, such content reinforces the values and beliefs one already holds.

This is why media of all kinds have only a very small influence on voters' decisions, says Judith Möller, professor of communication sciences at the Leibniz Institute for Media Research.

She studies the effects of social media and says, “Voters’ decisions are related to many different factors. It depends on where and how you grew up, what personal experiences you had – especially in the last weeks before the election – or who else you talk to about elections and politics.” According to Möller, the same factors also influence which media we use and what effect they have.

Political movements and new parties can quickly become visible on social media. But on social networks they essentially reach their own followers, and perhaps some undecided people. “It is difficult to convince people of something new. Through the media you can only convince those who are already convinced of something.”

Fake news and hate speech

Dealing with fake news and disinformation will become even more problematic in the future. Fake news is likely to increase if, as Mark Zuckerberg announced, the Meta group gives up professional fact-checking of news on its Facebook and Instagram platforms and blocks controversial content less and less frequently.

In this context, we can observe two effects, says Prof. Dr. Nicole Krämer, head of the Department of Social Psychology, Media and Communication at the University of Duisburg-Essen. On the one hand, surveys show that people do not want to fall prey to disinformation.

“The more important an issue is to someone's life, the more adept he or she is at seeking out information that is truly helpful, that is, information that is reliable and two-sided.”

But on the other hand, if the false information fits with something that is already embedded in someone's mind, the person may consider that information plausible, “even if they initially think: there's no way this can be,” says Krämer.

Another mechanism is at work here: “the more often you hear, read, or see a false message, the more likely it is to remain in memory.” This means that false information sometimes takes root – despite the fact that people actually want to avoid it.

The amount of false information on social media could also increase because there are fewer and fewer different opinions there, says Judith Möller.

The reason for this is an increasingly toxic culture of conversation and discussion characterized by insults or hate speech. “As a result, certain groups are excluded from discussions, and only those who can withstand this toxic culture of conversation continue to participate.”/DW/

Latest news

Greece imposes fee to visit Santorini, how many euros tourists must pay

2025-07-03 20:50:37

Don't make fun of the highlanders, Elisa!

2025-07-03 20:43:43

Gunfire in Durres, a 30-year-old man is injured

2025-07-03 20:30:52

The recount in Fier cast doubt on the integrity of the vote

2025-07-03 20:09:03

Heatwave has left at least 9 dead this week in Europe

2025-07-03 19:00:01

Oil exploitation, Bankers accused of 20-year fraud scheme

2025-07-03 18:33:52

Three drinks that make you sweat less in the summer

2025-07-03 18:19:35

What we know so far about the deaths of Diogo Jota and his brother André Silva

2025-07-03 18:01:56

Another heat wave is expected to grip Europe

2025-07-03 17:10:58

Accident on Arbri Street, car goes off the road, two injured

2025-07-03 16:45:27

Accused of two murders, England says "NO" to Ilirjan Zeqaj's extradition

2025-07-03 16:25:05

Gaza rescue teams: Israeli forces killed 25 people, 12 in shelters

2025-07-03 15:08:43

Diddy's trial ends, producer denied bail

2025-07-03 15:02:41

Agricultural production costs are rising rapidly, 4.8% in 2024

2025-07-03 14:55:13

Warning signs of poor blood circulation

2025-07-03 14:49:47

Croatia recommends its citizens not to travel to Serbia

2025-07-03 14:31:19

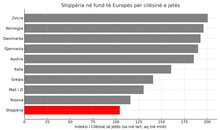

Berisha: Albania is the blackest stain in Europe for the export of emigrants

2025-07-03 14:20:19

'Ministry of Smoke': Activists Blame Government for Wasteland Fires

2025-07-03 13:59:09

AFF message of condolences for the tragic loss of Diogo Jota and his brother

2025-07-03 13:41:36

Five healthy foods you should add to your diet

2025-07-03 13:30:19

A unique summer season, full of rhythm and rewards for Credins bank customers!

2025-07-03 12:12:20

Fire situation in the country, 29 fires reported in 24 hours

2025-07-03 12:00:04

The constitution of the Kosovo Assembly fails for the 41st time

2025-07-03 11:59:57

The gendering of politics

2025-07-03 11:48:36

The price we pay after the "elections"

2025-07-03 11:25:39

Xhafa: The fire at the Elbasan landfill was deliberately lit to destroy evidence

2025-07-03 11:08:43

The 3 zodiac signs that will have financial growth during July

2025-07-03 10:48:01

Democratic MP talks about the incinerator, Spiropali turns off her microphone

2025-07-03 10:39:24

Ndahet nga jeta tragjikisht në moshën 28-vjeçare ylli i Liverpool, Diogo Jota

2025-07-03 10:21:03

Cocaine trafficking network in Greece, including Albanians, uncovered

2025-07-03 10:10:12

Korreshi: Election manipulation began long before the voting date

2025-07-03 09:39:13

Arrest of Greek customs officer 'paralyzes' vehicle traffic at Qafë Botë

2025-07-03 09:28:41

After Tirana and Fier, the boxes are opened in Durrës today

2025-07-03 09:21:10

Enea Mihaj transfers to the USA, will play as an opponent of Messi and Uzun

2025-07-03 09:10:04

Foreign exchange, the rate at which foreign currencies are sold and bought

2025-07-03 08:53:50

Index, Albania has the worst quality of life in Europe

2025-07-03 08:48:10

Horoscope, what do the stars have in store for you today?

2025-07-03 08:17:05

Clear weather and high temperatures, here's the forecast for this Thursday

2025-07-03 08:00:37

Posta e mëngjesit/ Me 2 rreshta: Çfarë pati rëndësi dje në Shqipëri

2025-07-03 07:46:48

Lufta në Gaza/ Pse Netanyahu do vetëm një armëpushim 60-ditor, jo të përhershëm?

2025-07-02 21:56:08

US suspends some military aid to Ukraine

2025-07-02 21:40:55

Methadone shortage, users return to heroin: We steal to buy it

2025-07-02 20:57:35

Government enters oil market, Rama: New price for consumers

2025-07-02 20:43:30

WHO calls for 50% price hike for tobacco, alcohol and sugary drinks

2025-07-02 20:41:53