The administration of President Joe Biden is preparing to open a new front in its effort to protect US artificial intelligence (AI) from China and Russia. Its initial plans are to put safeguards around the most advanced AI models. Government and private sector researchers worry that US adversaries could use these models, which mine vast amounts of text and images to gather information and produce content, to launch aggressive cyber attacks or even to create powerful biological weapons.

Below are some of the threats posed by AI:

Manipulated materials, fabricated videos that look real and are created by AI algorithms from the footage that abounds online, are surfacing on social media and blurring fact and fiction in the polarized world of American politics. While such synthetic videos have been around for several years, they have become much more powerful in the last year thanks to a slew of new "generative AI" tools, such as Midjourney, that make it easier and cheaper to create persuasive manipulated materials.

AI image-generation tools from companies such as OpenAI and Microsoft can be used to produce images that could promote disinformation about elections, despite each company having policies against creating disinformation content, researchers said in a report published in March. Some disinformation campaigns simply use AI's ability to mimic genuine news articles as a tool to spread false information. Some major social media platforms, such as Facebook, X and YouTube, have made efforts to ban and delete manipulated materials.

For example, last year a Chinese government-controlled news portal that uses an AI platform republished a previously circulated false story claiming that the United States runs a laboratory in Kazakhstan to develop biological weapons for use against China, the US Department of Homeland Security said in a 2024 national security threat assessment. White House national security adviser Jake Sullivan, speaking at an AI event in Washington on Wednesday, said that this problem cannot be easily solved because it combines AI capabilities with "the intention of state and non-state actors to use disinformation, to subvert democracies, to advance propaganda and to change the perception in the world".

The US intelligence community, research groups, and academics are deeply concerned about the dangers posed by foreign bad actors gaining access to AI capabilities. Researchers at Gryphon Scientific and Rand Corporation noted that advanced AI models could provide information useful for creating biological weapons. Gryphon analyzed how large language models (LLMs), computer programs that draw on large amounts of text to generate answers to questions, could be used by hostile actors to cause harm in the life sciences domain, and found that "they can provide information that can assist a malicious actor in creating a biological weapon, providing useful, accurate and detailed information every step of the way."

The researchers found, for example, that an LLM can provide PhD-level problem-solving knowledge when working with a virus capable of spreading. Their research also found that LLMs can help plan and execute a biological attack. The US Department of Homeland Security (DHS) said that cyber actors would likely use AI to "develop new tools" to "enable larger, faster, more efficient and more obfuscated cyber attacks" against vital infrastructure, including pipelines and railways.

China and other adversaries are developing AI technology that could undermine US cyber defenses, including generative AI programs that support malware attacks, DHS said. Microsoft said in a report in February that it had tracked hacker groups linked to the Chinese and North Korean governments, as well as Russia's military intelligence and Iran's Revolutionary Guard, that were trying to perfect their hacking campaigns using LLMs. A bipartisan group of lawmakers announced a bill late Wednesday that would make it easier for the Biden administration to impose export controls on AI models in an effort to protect valuable American technology against foreign bad actors.

The bill, sponsored by House Republicans Michael McCaul and John Moolenaar and Democrats Raja Krishnamoorthi and Susan Wild, would also give the Commerce Department authority to bar Americans from working with foreigners to develop AI systems that pose risks to US national security./ REL

Latest news

Uzbekistan qualifies for the World Cup for the first time

2025-06-05 21:14:35

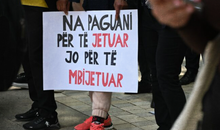

Index: Albania among countries that consistently violate workers' rights

2025-06-05 20:53:35

Accident in Burrel, two vehicles collide, 6 injured

2025-06-05 20:31:27

Discover foods that help you relieve stress

2025-06-05 20:16:34

Kalaja: I have a video where votes were taken from the DP and given to the PS

2025-06-05 19:58:04

Berisha: The international community does not accept the farce

2025-06-05 18:57:18

Trump after conversation with Xi: US and China will resume trade talks

2025-06-05 18:35:36

Berisha on May 11: 28 MPs were under the patronage of drug cartels

2025-06-05 18:15:44

The session in the Assembly closes, the majority approves the draft laws alone

2025-06-05 17:55:21

Kurban Bajrami, kreu i Komunitetit Mysliman të Shqipërisë uron besimtarët

2025-06-05 17:29:17

Media at OSCE conference: Organized crime has captured the Albanian state

2025-06-05 16:50:41

Car hits 75-year-old man at white lines in Vlora

2025-06-05 16:42:48

Protest in Spaç, after restoration interventions

2025-06-05 16:27:49

Photo/ Concrete mixer falls into abyss in Ulëz, driver rushed to hospital

2025-06-05 16:15:15

May 11/ Këlliçi: There are attempts to influence the final OSCE-ODIHR report

2025-06-05 16:06:38

Immigration is emptying schools and universities

2025-06-05 15:53:38

Plague breaks out, Kosovo bans import of sheep and goats from Shkodra and Kukësi

2025-06-05 15:48:23

After Tirana, KAS also decides to open the ballot boxes in Dibër

2025-06-05 15:31:03

Tirana is "paralyzed" again, here are the roads that will be blocked tomorrow

2025-06-05 15:20:12

Serbia is coming to Albania tomorrow, here's where it will be accommodated

2025-06-05 14:24:43

US Embassy updates visa appointment system: More flexibility for applicants

2025-06-05 14:21:01

Drug trafficking with "tentacles" in Europe, GJKKO seals prison for 9 arrested

2025-06-05 13:39:35

Deserting from the socialist ranks, Erion Braçe "becomes" a Democrat

2025-06-05 13:23:49

May 11 elections, KAS decides on a full recount of votes in Tirana

2025-06-05 13:22:28

Clashes in the Parliament/ Elisa Spiropali expels Flamur Noka from the session

2025-06-05 13:04:42

A Girl, Otherwise, A Boy

2025-06-05 12:57:30

Accident at the "Albchrome" factory in Elbasan, three people arrested

2025-06-05 12:54:40

Berisha: The law of farce is that the dictator's votes are always increasing

2025-06-05 12:19:16

The murder saga, Alibej: It started after I gave Talo Çela's location

2025-06-05 11:45:27

Muslims celebrate Eid al-Adha, KMSH announces where prayers will be held

2025-06-05 11:24:50

Bardhi: The EU delegation said that crime controlled the elections in Elbasan!

2025-06-05 11:16:51

Journalists were censored by Spiropali, AGSH: Fraud and institutional propaganda

2025-06-05 11:00:20

Noka-majority: The foundations of your power rest on the bought vote

2025-06-05 10:54:00

Analysis: Peace between Russia and Ukraine, further away than it seemed

2025-06-05 10:51:57

Serious in Austria/ 35-year-old Albanian dies at work

2025-06-05 10:21:00

They produced and sold cannabis, 2 brothers arrested

2025-06-05 09:50:30

Guard employee commits suicide with service weapon

2025-06-05 09:15:00

Accident in Fier/ Car collides with an agricultural vehicle, 3 people injured

2025-06-05 09:08:55

Parashikimi i motit për sot

2025-06-05 08:31:59

HOROSCOPE/ Here's what the stars have predicted for each sign

2025-06-05 08:16:17

Morning Post/ In 2 lines: What mattered yesterday in Albania

2025-06-05 07:52:36

Video/ Abin Kurti narrowly escapes, almost falling down the stairs

2025-06-04 22:54:08

The first case of small cattle plague in the country is confirmed

2025-06-04 22:36:54

Blushi: The person who kidnapped Meta became police chief

2025-06-04 21:45:24

"Fraud" with the forgiveness of State Police fines!

2025-06-04 21:15:22

"The real reason why young Albanians like me are coming to the UK illegally"

2025-06-04 20:07:39

Government opens a legal path for investments in Army properties

2025-06-04 19:49:25

Elderly woman forgets stove on, house burns down in Vlora

2025-06-04 19:46:42

Ersekë/ Elderly man struck by lightning, dies on the spot

2025-06-04 19:12:55

Trump calls Putin, warns Ukraine that Russia will respond to attack on airbases

2025-06-04 19:05:56

Zelensky's Chief of Staff Meets with Secretary Rubio in Washington

2025-06-04 18:53:37

Rama opens a legal "path" for investments in Army properties

2025-06-04 18:41:33

How to think like a Stoic

2025-06-04 18:19:02

May 11th Elections/Balliu: The European Parliament condemned the electoral farce

2025-06-04 18:05:14

Tirana Pyramid “symbol of exclusion” for people with disabilities

2025-06-04 17:32:27

SPAK sends Evis Berberi, Belinda Balluku's right-hand man, for trial

2025-06-04 16:39:23

Reporting to the Parliament of independent institutions postponed indefinitely

2025-06-04 16:11:48

Turkish court jails five mayors of largest opposition party

2025-06-04 15:56:26

KAS vendos hapjen e 229 kutive të materialeve zgjedhore të Qarkut Vlorë

2025-06-04 15:04:47